I Built a Prompt That Extracts Trading Strategies from Academic Papers — and Found the Best AI Tool for the Job

The exact prompt I use, plus a live example you can steal.

And I can say, without hesitation:

The hardest part isn’t reading the paper.

It’s extracting the strategy.

Finding the exact logic the author used.

Understanding where the data came from.

Decoding performance metrics buried across seven different tables with footnotes in size 9 font.

It takes time. A lot of it.

And organizing that knowledge so you can revisit and reuse it?

Takes even more.

Then one day…

You meet a Large Language Model…

And it does in seconds what used to take hours.

Because at the end of the day, you’re only after two things:

Does this paper contain edge?

And if so, what’s the strategy?

And somewhere deep inside a 31-page PDF with 97 references, three appendices, and more footnotes than a Tolkien novel…

Lies a paragraph.

Just one.

And that paragraph holds the treasure:

"We go long when [x] happens."

"We exit when [y] crosses [z]."

"Returns spike during [Q1–Q2] windows."

But first, you’ll wade through:

A half-page explaining Fama-French factors

A meditation on log-volatility clustering

An equation that looks like it escaped from CERN

You skim. You squint. You Ctrl+F "strategy" like a madman.

You study the charts hoping they labeled something “entry” or “exit” or “rules”…

Nothing.

You didn’t open this paper to study econometrics.

You just wanted one good idea worth testing.

A setup.

A signal.

A shot at something real.

Happens to me all the time.

I love academic research.

But I don’t love spending 90 minutes chasing one usable paragraph.

So I did what any lazy-but-hopeful trader would do:

I built myself a research intern.

Unpaid. Unfed. Always available.

His name is RogueQuantPapers

I give him the paper. He gives me the logic.

And today I’m going to show you how to do the same.

And hey, if diving into the world of research prompts isn’t your thing…

You can still benefit from it.

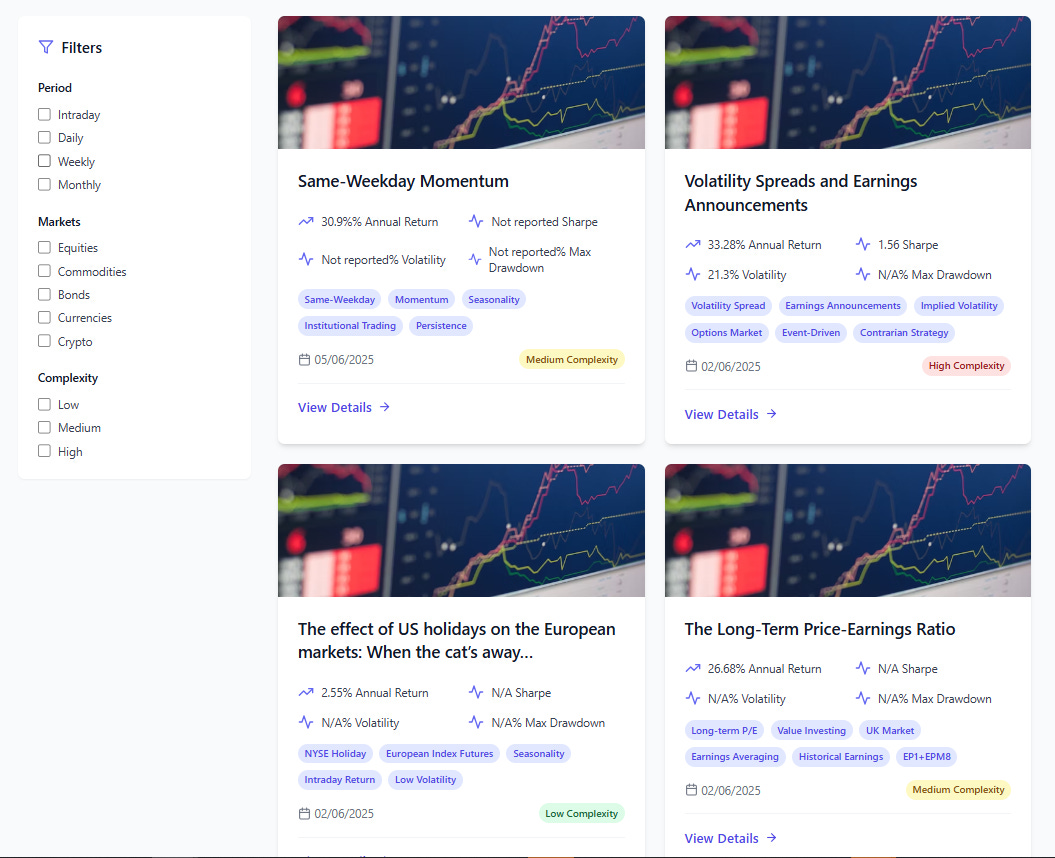

Annual subscribers get full access to my private app:

a curated library of hundreds of academic trading papers.

Each one is organized, summarized, and (most importantly) has the core strategy logic already extracted.

No fluff, just the juice, the testable ideas.

Here’s a screenshot from the app:

And I’m actively adding Python code to many of them so you can test strategies with your own data.

The Real Problem

We don’t lack ideas.

We lack time (especially if you have kids and want to see them grow)

Academic papers are (potentially) full of edge.

But buried under 12 pages of citations and academic throat-clearing.

You open one with hope.

You end up elbow-deep in factor models and regression scaffolding.

And somewhere in there (maybe) is a tradable concept.

But most traders will never find it.

Not because they’re lazy.

Because they’re overwhelmed.

And even if you do find the setup?

You might misread it.

Miss a condition.

Build something wrong.

All from one poorly phrased paragraph.

That’s the tragedy:

Hours burned. Brain fried. Still no code.

Nobody’s giving you points for reading PDFs.

Only for building things that work.

So let’s fix that.

The Shortcut

That’s why I leaned hard into LLMs to handle the heavy lifting.

But here’s the thing:

Extracting strategy logic from academic papers isn’t easy.

You’ve got tables everywhere (which one holds the actual Sharpe again?)

Dozens of parameter combinations

Ambiguous language that sounds smart but says very little

If you’ve ever read a trading paper, you know exactly what I mean.

And just so you know, I’m not new to this.

During my master’s degree (which, by the way, was entirely focused on algorithmic trading), I read dozens of academic papers.

And even with all that practice, I’m still slow at finding and extracting what really matters.

Because it’s not just about reading.

It’s about decoding.

Buried logic, inconsistent terminology, scattered performance tables…

It’s a mess even for someone who knows where to look.

So a few months ago, I started experimenting with prompts to extract the good stuff.

After dozens of iterations, I built one that gives me exactly what I need 99% of the time.

And that’s the prompt I’m sharing with you today.

Real Example

Before I show you the prompt…

Let me walk you through a real-world case study.

A few days ago, my friend Quantitativo shared an interesting paper in a Note.

Nice, right?

That note caught my attention.

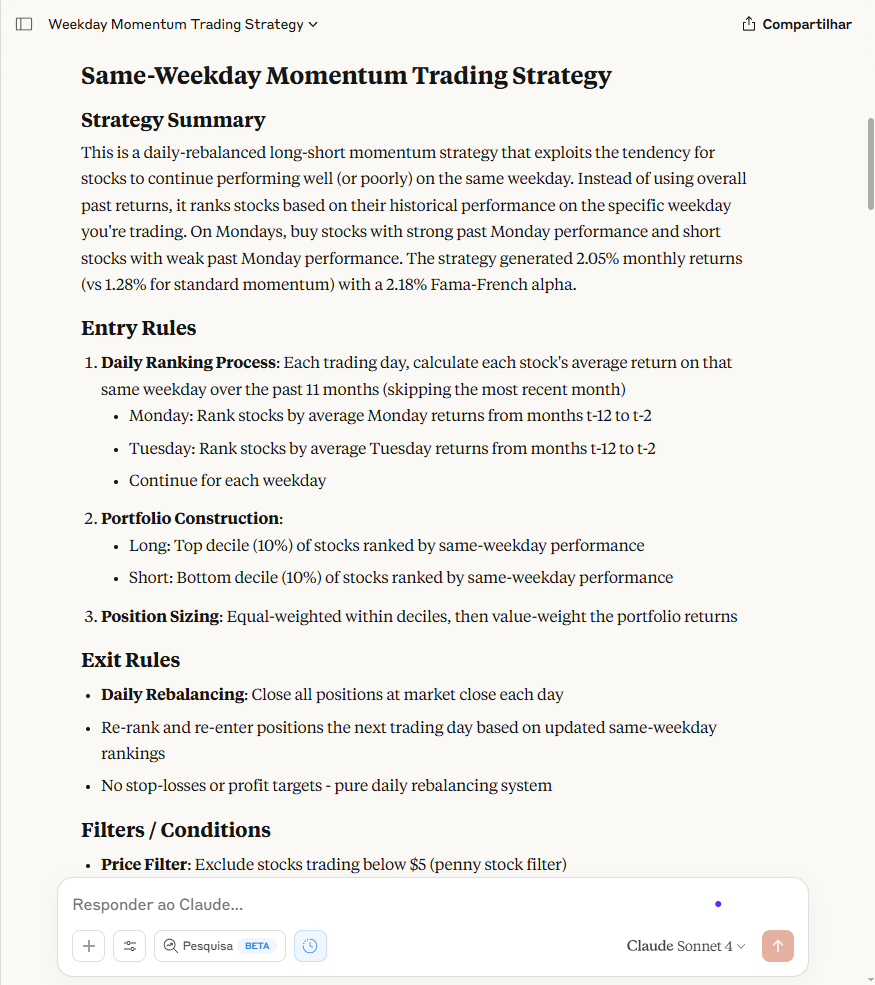

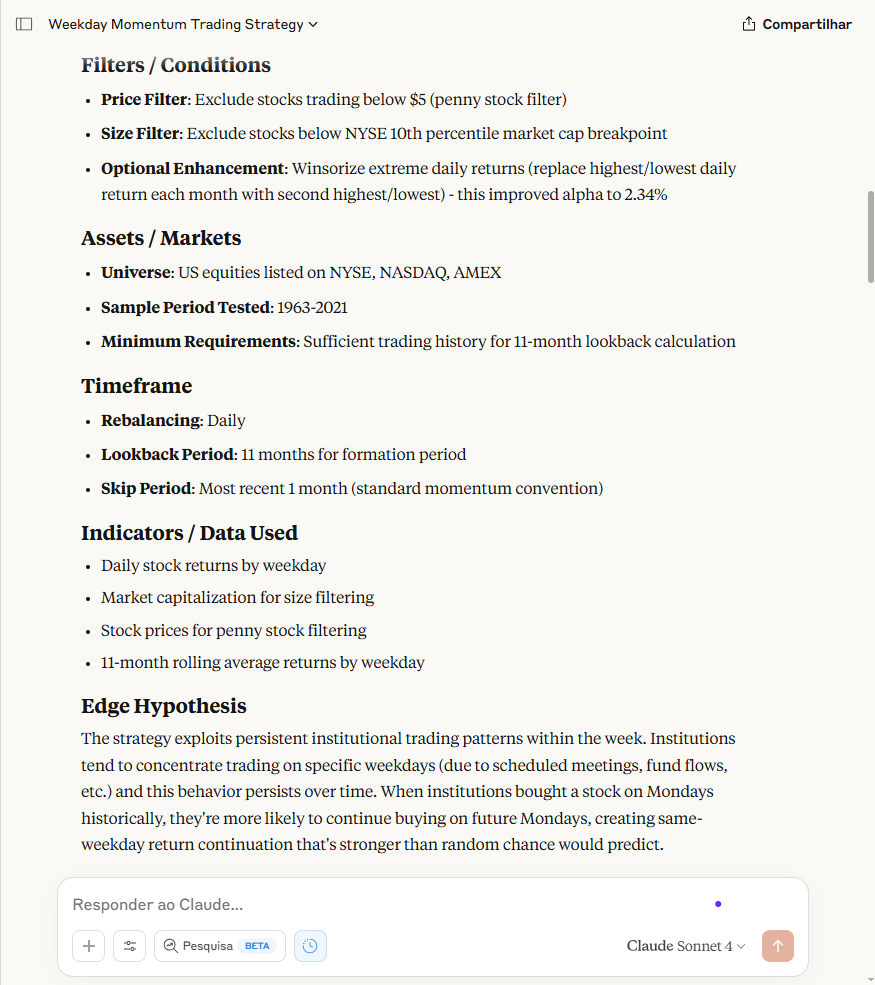

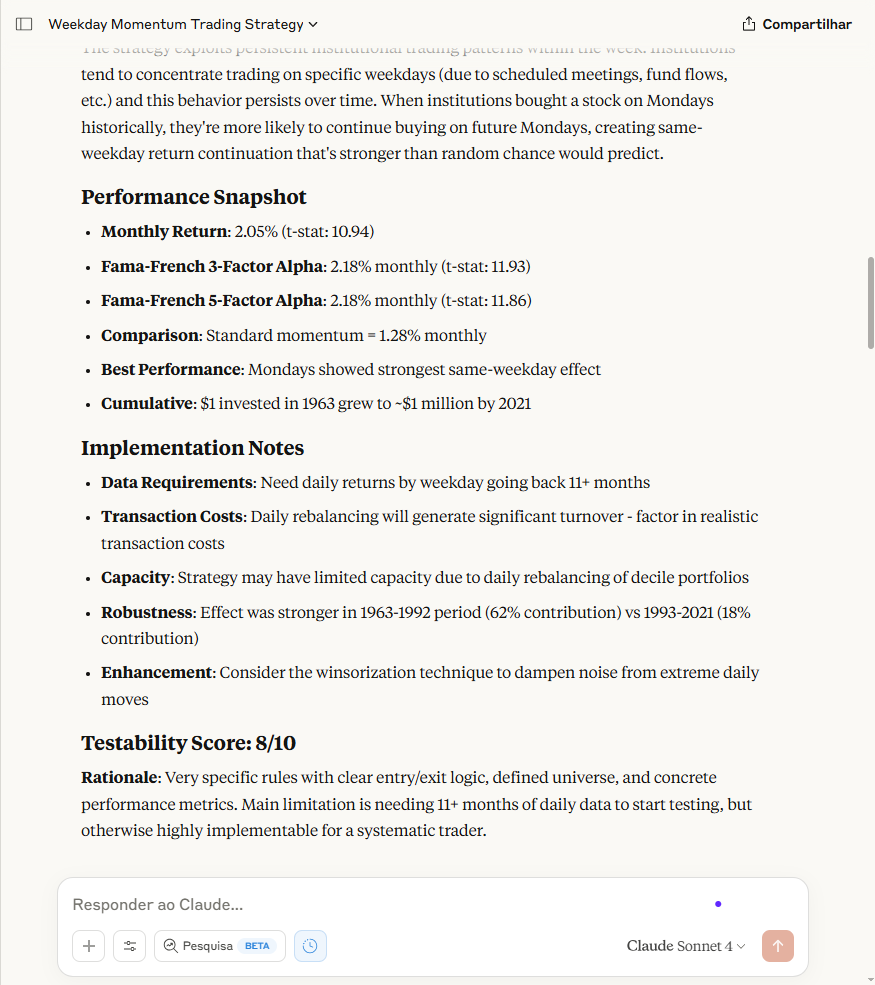

So I downloaded the academic paper PDF, dropped it straight into my prompt and here’s what came back:

Pretty clean, right?

From here, you could go one step further:

Ask the LLM to write the code and even run a backtest for you.

But that’s a topic for another post.

And yes, I tested this prompt with six different LLMs…

The top performers?

Notebook LLM - my favorite (doesn’t hallucinate)

Claude

ChatGPT

That said, all six gave solid results, good enough to use.

Now, let’s get to the good part.

The prompt…